
Introduction

Configuration Management has always been a difficult problem, and it has grown more difficult as product complexity has increased. As end-user expectations evolve, development organizations need more customized and tailored solutions. This creates a need to manage versions and variants effectively, which adds further sophistication to the problem. Managing such a complex product development lifecycle therefore requires a solution of comparable sophistication.

The complexity of end products and the need to tailor them for end users are not the only concerns for system developers. Increasing competitive pressure and the demand for efficiency also carry growing weight when developing a system.

Challenges in Product Development

Of course, when we talk about developing a system, we should consider how companies can develop multiple products, share common components, and manage variants, product lines, and even product families, especially when the system must serve many different user profiles, expectations, and needs.

One main concern for such companies is how to optimize the reuse of assets across product lines or product families so that development stays effective and efficient. Without effective tools and technologies to govern it, such reuse can introduce new risks. For instance, a change to a component in one variant or one release cycle may not be propagated correctly, completely, and efficiently to all the other versions and variants that use it.

This can result in redundancy, error-prone manual work, and conflicts, which is exactly what we want to avoid when managing product lines, families, versions, and variants. In this article, we briefly explain how the IBM Engineering Lifecycle Management (ELM) solution tackles these challenges by providing cross-domain configuration management across requirements, system design, verification and validation, and all the way down to software source code management.

This solution, Global Configuration Management (GCM), is an optional application of the ELM solution, which integrates several products to provide a complete set of applications for software or systems development.

The Solution

Global Configuration Management (GCM) makes it possible to reuse engineering artifacts from different system components, each with its own lifecycle status, across multiple parts of the system. Stakeholder, system, sub-system, safety, software, and hardware requirements, verification and validation scenarios, test cases, test plans, system architectural design, simulation models, and so on can be reused and configured from a single source of truth for different versions and variants. Each of these information sources has its own configuration identification, according to its domain and lifecycle. One main challenge is to combine all these islands of information into one overall configuration.

Fig 1

In the example above, there are 3 global configurations:

- A configuration which was sent to manufacturing, the initial phase of the ACC (Adaptive Cruise Control)

- A configuration where the identified issues are being addressed (mainly to fix some software bugs)

- And a configuration that engineering teams are currently working on to release the next, functionally enriched revision.

All these configurations include a certain set of requirements; the test cases that will be used to verify those requirements; the system architecture parts that satisfy the requirements and are used during verification; and, if the system includes software, the source code that implements it.

Of course, the sketch above can be extended to the full configuration of an end product, for instance a complete standalone system such as an automobile. In this case, we define a component hierarchy to represent the car. For guidance on structuring components for global configurations, refer to IBM's article on the topic.

In such applications of GCM, a combination of system components and streams can be used as follows to define the whole system:

Fig 2: Image from article: "CLM configuration management: Defining your component strategy" - https://jazz.net/library/article/90573

In such examples, the streams shown as right arrows on an orange background (global streams) each correspond to a part of the system and are composed of several configurations from the relevant domains. For instance, an “air pressure sensor” is defined by a set of requirements for this part, a system design for the sensor, and test cases that verify the requirements. All these domain-level, local configurations correspond to different information assets. The global configuration can be defined so that if a different configuration is selected for the Ignition System ECU (for example, a different baseline for the Ignition System ECU Requirements, Test Plans and Cases, and Firmware), we end up with a different variant of the system. This effect is illustrated in the figure below:

Fig 3: Developing 3 different variants at the same time, facilitating reusability.
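To make the composition idea concrete, the sketch below models a global configuration as a table that selects one local configuration (stream or baseline) for each component and domain, and derives a second variant by overriding only the Ignition System ECU contributions, as in Fig 3. It is a minimal, hypothetical Python model that assumes a global configuration is nothing more than such a mapping; the names LocalConfig, GlobalConfig, derive_variant, shared_contributions and all baseline names are illustrative and are not part of any ELM or GCM API.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple

# One contribution is identified by (component, domain),
# e.g. ("Ignition System ECU", "requirements"). Illustrative only.
Key = Tuple[str, str]


@dataclass(frozen=True)
class LocalConfig:
    """A domain-level (local) configuration: a stream or a baseline."""
    name: str
    kind: str  # "stream" (still changing) or "baseline" (frozen snapshot)


@dataclass
class GlobalConfig:
    """A global configuration selects exactly one local configuration
    for every (component, domain) contribution."""
    name: str
    contributions: Dict[Key, LocalConfig] = field(default_factory=dict)


def derive_variant(base: GlobalConfig, name: str,
                   overrides: Dict[Key, LocalConfig]) -> GlobalConfig:
    """Create a variant that reuses every contribution of `base` except the
    ones in `overrides`. Reused entries point at the same LocalConfig objects,
    so the underlying local configurations are shared, not duplicated."""
    contributions = dict(base.contributions)  # new mapping, shared values
    contributions.update(overrides)
    return GlobalConfig(name, contributions)


def shared_contributions(a: GlobalConfig, b: GlobalConfig) -> Set[Key]:
    """Keys for which both global configurations select the same local config."""
    return {k for k, v in a.contributions.items() if b.contributions.get(k) == v}


ECU, SENSOR = "Ignition System ECU", "Air Pressure Sensor"
standard = GlobalConfig("Standard Variant", {
    (SENSOR, "requirements"): LocalConfig("Sensor Reqs BL1", "baseline"),
    (SENSOR, "tests"): LocalConfig("Sensor Tests BL1", "baseline"),
    (ECU, "requirements"): LocalConfig("ECU Reqs BL1", "baseline"),
    (ECU, "tests"): LocalConfig("ECU Tests BL1", "baseline"),
    (ECU, "firmware"): LocalConfig("ECU FW BL1", "baseline"),
})

# A second variant: only the Ignition System ECU selects different baselines.
variant_b = derive_variant(standard, "Variant B", {
    (ECU, "requirements"): LocalConfig("ECU Reqs BL2", "baseline"),
    (ECU, "tests"): LocalConfig("ECU Tests BL2", "baseline"),
    (ECU, "firmware"): LocalConfig("ECU FW BL2", "baseline"),
})

print(sorted(shared_contributions(standard, variant_b)))
# [('Air Pressure Sensor', 'requirements'), ('Air Pressure Sensor', 'tests')]
```

The design choice worth noting is that a variant is defined by its differences from a base configuration; everything it does not override is reused as-is.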

We may have a base product and several variants developed from it. The variants may share considerable commonality because they are based on a common platform. This platform is a collection of engineering assets such as requirements, designs, embedded software, test plans, and test cases.

Effective reuse of the platform engineering assets is achieved through the Global Configuration Management application. With GCM, ELM can efficiently manage the sharing of engineering artifacts and support effective variant management. While a standard variant (also called the Core Platform) is being developed (see Fig 3), some artifacts, such as specifications, test cases, system models, and source code for sub-systems, components, or sub-components, can be shared and reused directly, while others undergo small changes that make a big difference. Sometimes a fix surfaces in one variant and should be propagated to other variants as well, because we may not know whether those variants are affected by the same issue. Such fixes can be propagated easily and effectively to all variants, ensuring that the issue is addressed there too. This is possible because all artifacts, whether reused or changed, are based on a single source of truth and share the same basis. This also makes comparing configurations (variants) very easy, just one click away.

ELM with GCM manages strategic reusability by facilitating parallel development of the “Standard Variant” (Core Platform) and allows easy and effective configuration comparison and change propagation across variants.
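Comparison and fix propagation can be sketched in the same spirit. In this simplified, hypothetical model each variant is a dictionary from (component, domain) to the name of the selected baseline; a comparison lists the contributions that differ, and a fix is modelled as a new baseline that replaces the old one in every variant still using it. The names compare and propagate_fix, and all baseline names, are assumptions for illustration; this is not how ELM implements comparison or propagation internally.

```python
from typing import Dict, List, Optional, Tuple

Key = Tuple[str, str]    # (component, domain), illustrative
Config = Dict[Key, str]  # key -> name of the selected baseline (hypothetical)


def compare(a: Config, b: Config) -> Dict[Key, Tuple[Optional[str], Optional[str]]]:
    """List the contributions that differ between two global configurations
    as {key: (selection_in_a, selection_in_b)}; a missing entry shows as None."""
    keys = sorted(set(a) | set(b))
    return {k: (a.get(k), b.get(k)) for k in keys if a.get(k) != b.get(k)}


def propagate_fix(variants: Dict[str, Config], key: Key, old: str, new: str) -> List[str]:
    """Replace baseline `old` with the fixed baseline `new` in every variant
    that still selects `old` for `key`; return the names of updated variants."""
    updated = []
    for name, config in variants.items():
        if config.get(key) == old:
            config[key] = new
            updated.append(name)
    return updated


ECU_FW = ("Ignition System ECU", "firmware")
SENSOR_REQS = ("Air Pressure Sensor", "requirements")
variants = {
    "Standard": {ECU_FW: "FW 1.0", SENSOR_REQS: "Reqs 1.0"},
    "Variant A": {ECU_FW: "FW 1.0", SENSOR_REQS: "Reqs 1.1"},
    "Variant B": {ECU_FW: "FW 2.0", SENSOR_REQS: "Reqs 1.0"},
}

print(compare(variants["Standard"], variants["Variant A"]))
# {('Air Pressure Sensor', 'requirements'): ('Reqs 1.0', 'Reqs 1.1')}

# A bug fixed in FW 1.0 reaches every variant built on FW 1.0; Variant B,
# which uses FW 2.0, is left unchanged.
print(propagate_fix(variants, ECU_FW, "FW 1.0", "FW 1.0.1"))
# ['Standard', 'Variant A']
```

Because every variant refers to the same named baselines, finding the affected variants is a lookup rather than a manual search through copies.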

Tips and Best Practices for GCM

The technology and implementation behind GCM are elegant. When developing systems, especially safety-critical systems, two-way linking of information is required. Information here means any engineering artifact, from requirements to tests, reviews, work items, system architecture, and code changes.

So one should maintain a link from source to target, and backward traceability from target to source must also be satisfied. How is this possible with variants? There seems to be a problem here, especially for traceability between different domains, say between requirements and tests.

To be more specific, let's look at a simple example:

In this example, we have two variants. The initial variant consists of one requirement and a test case linked to it: the requirement says "The system shall do this," and the test case specifies how to verify it. The link between the requirement and the test case is valid (green connection). In the second variant, we have to update the requirement, because the system shall do this under a certain condition. Both requirements have the same ID: they are two different versions of the same artifact. We can compare these configurations and see how the specification changed from version 1 to version 2. But what happens to the link? Is it still valid? Does the test case really test the second version of the requirement? We have to check, which is why the link is no longer considered valid in the second variant.
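The suspect-link situation can be expressed as a simple rule: a link remembers which version of the requirement it was last confirmed against, and it becomes suspect in any configuration that selects a different version. The Python sketch below encodes that rule with hypothetical names (Link, is_suspect, REQ-1, TC-1); it illustrates the reasoning in the example above and is not how ELM computes suspicion internally.

```python
from dataclasses import dataclass, replace
from typing import Dict


@dataclass(frozen=True)
class Link:
    """A link from a requirement to the test case that verifies it, recording
    the requirement version it was last confirmed against (hypothetical model)."""
    requirement_id: str
    test_id: str
    confirmed_version: int


def is_suspect(link: Link, selected_versions: Dict[str, int]) -> bool:
    """True when the configuration selects a different version of the
    requirement than the one the link was confirmed against."""
    return selected_versions[link.requirement_id] != link.confirmed_version


# Both variants contain REQ-1, but they select different versions of it.
variant_1 = {"REQ-1": 1}  # "The system shall do this."
variant_2 = {"REQ-1": 2}  # "The system shall do this under a certain condition."

link = Link("REQ-1", "TC-1", confirmed_version=1)
print(is_suspect(link, variant_1))  # False: TC-1 was checked against version 1
print(is_suspect(link, variant_2))  # True: the requirement changed, so re-check

# After reviewing TC-1 against version 2, the second team confirms its own link
# instance; the first variant keeps the original link unchanged.
link_in_variant_2 = replace(link, confirmed_version=2)
print(is_suspect(link_in_variant_2, variant_2))  # False
```

The last step already hints at the problem discussed next: each variant ends up needing its own instance of what is logically the same link.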

The team working on the first variant sees that the test case exactly verifies version 1 of the requirement. The team working on the second variant, however, cannot be sure: the link between the requirement and the test case is suspect because the requirement has changed. How is this possible with bidirectional links? Strictly speaking, it is not. The link is supposed to be the same link in both variants, just as the requirement is the same artifact, so the links themselves must also be version controlled. However, if both ends of a link are anchored in artifacts that live in different domains, it is not possible to create two instances of the same link. ELM solves this, when Global Configuration is enabled, by dropping the reverse direction of such links and maintaining backward links through link indices. This is the beauty of the design: links are stored from source to target, while the backward view is maintained by link indices, which makes the solution very flexible for defining variants and having different instances of links between artifacts in different variants.
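The forward-link-plus-index design can be illustrated with a small, hypothetical model: each configuration stores only forward links (here assumed to run from a test case to the requirement it validates), and a back-link index is built per configuration by inverting those links, so the same requirement can have different incoming links in different variants. The function names, the "validates" link type and the artifact IDs are illustrative assumptions; ELM's actual link indexing works on its own data model and is not reproduced here.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Forward links as stored at their source, per configuration (hypothetical model):
# configuration name -> list of (source, link_type, target)
ForwardLinks = Dict[str, List[Tuple[str, str, str]]]
# configuration name -> target -> {(link_type, source)}
BacklinkIndex = Dict[str, Dict[str, Set[Tuple[str, str]]]]


def build_backlink_index(links: ForwardLinks) -> BacklinkIndex:
    """Invert the forward links of every configuration into a back-link index.
    Targets never store back links themselves; they are answered from here."""
    index: BacklinkIndex = {}
    for config, triples in links.items():
        per_target: Dict[str, Set[Tuple[str, str]]] = defaultdict(set)
        for source, link_type, target in triples:
            per_target[target].add((link_type, source))
        index[config] = dict(per_target)
    return index


def back_links(index: BacklinkIndex, config: str, target: str) -> Set[Tuple[str, str]]:
    """Return the (link_type, source) pairs pointing at `target` in `config`."""
    return index.get(config, {}).get(target, set())


# The same requirement REQ-1 exists in both variants, but the links differ:
# variant 2 adds a (hypothetical) test case TC-2 for the new condition.
links: ForwardLinks = {
    "Variant 1": [("TC-1", "validates", "REQ-1")],
    "Variant 2": [("TC-1", "validates", "REQ-1"), ("TC-2", "validates", "REQ-1")],
}
index = build_backlink_index(links)

print(sorted(back_links(index, "Variant 1", "REQ-1")))  # [('validates', 'TC-1')]
print(sorted(back_links(index, "Variant 2", "REQ-1")))
# [('validates', 'TC-1'), ('validates', 'TC-2')]
```

Since the requirement itself never stores "validated by" links, nothing on the requirement side has to be duplicated when the variants' links diverge; only the per-configuration index differs.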

There are some considerations to make before enabling Global Configurations in projects. The main questions I ask when deciding whether to enable it are: Do I need to manage variants? How many variants? Is reuse required? The answers to these questions usually make the decision clear.

Typical follow-up questions when designing the global configuration environment are: What is a component, and how granular should it be? How should streams and configurations be organized? Which domains should be included in the global configuration? When should baselines be created? How should a taxonomy of system components be defined? This topic deserves a separate article, so let's leave it for next time.

Conclusion

IBM ELM’s Global Configuration Management (GCM) is a dedicated solution for version and variant management, designed to enable Product Line Engineering by facilitating effective and strategic reuse.

With GCM implemented, the difficulties around sharing changes among different variants disappear, and every change can be easily and effectively propagated among different product variants.

GCM mainly helps organizations reduce time to market and cost by reducing error-prone manual and redundant activities.

It also improves product quality by automating the change management process which can be used by multiple product development teams and multiple product variants.

References

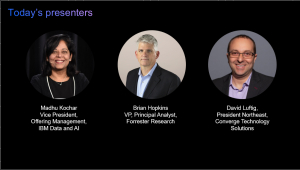

With emerging technologies in Edge, Digital Twin, Asset Performance Management (APM) and AI/ML, how can your organization justify their business value and make the case for investment? What are the new measures and KPIs your organization should be tracking to reinforce the justification of these emerging technologies?

Join us to learn how we can help you make the business case for 21st Century maintenance and reliability.

Tom Woginrich is a Principal Value Consultant in the IBM Sustainability Software division. He is a subject matter expert in Asset Management best practices and has worked with many companies to transform business processes and implement technology improvements. Mr. Woginrich has over 30 years of Asset Management experience leading and working in large enterprises. He has a broad range of industry experience that includes Pulp & Paper, Energy & Utilities, Chemicals & Petroleum, Automotive, Oil & Gas, Life Sciences/Pharmaceuticals, Facilities, and Semiconductor Manufacturing.

His working experience and knowledge in operations, field services and maintenance give him a very broad comprehension of the total asset management lifecycle and the value opportunities contained within. It is this background, together with his experience managing and deploying technology, that helps him facilitate the business value case conversation that should accompany technology implementations.


Leif Davidsen - Program Director, Product Management, Cloud Pak for Integration

Leif Davidsen is the Product Management Program Director for IBM Cloud Pak for Integration. Leif has worked at IBM's Hursley Lab since 1989, having joined with a degree in Computer Science from the University of London.

Andy Garratt - Technical Product Manager, IBM Cloud Pak for Integration

Andy Garratt is an Offering Manager for IBM’s Cloud Pak for Integration, based in IBM’s Hursley labs in the UK. He has over 25 years’ experience in architecting, designing, building and implementing integration solutions for customers around Europe and around the world, and loves to meet users, IBMers, partners and customers to find out about all the great things they’re doing with IBM integration products.

Over the last few months, we’ve seen the role of AI grow from an important technology tool to a true force for good. From automating answers to citizens’ questions about symptoms and other topics to finding insights in public data, AI has helped people deal with an unprecedented challenge at a scale never seen before.

But as organizations and individuals emerge from this pandemic, we see the role of AI continuing to be critical – not only to respond to what’s happening today, but to plan for an uncertain future.

Join our panel of AI experts and business leaders as they share key learnings from the past few months and what organizations can do to prepare for and manage future changes with AI.


This is session 7 of 7 that covers the IBM ELM tool suite.

IBM Engineering Lifecycle Management (ELM) is the leading platform for today’s complex product and software development. ELM extends the functionality of standard ALM tools, providing an integrated, end-to-end solution that offers full transparency and traceability across all engineering data. From requirements through testing and deployment, ELM optimizes collaboration and communication across all stakeholders, improving decision-making, productivity and overall product quality.

Presented by: Jim Herron of Island Training.

Our solution engineer will walk you through the product and how easy Instana makes it to:

- Automate discovery, mapping, and configuration with zero human interaction

- Use AIOps to establish a baseline of application performance

- Analyze metrics, traces, and logs

- Trace every request, with no sampling

- Monitor hybrid cloud and mobile environments

Purpose:

Applications power businesses. When they run well, your customers have a great experience, and your development and infrastructure teams remain focused on their top initiatives. In today’s world, applications are becoming more distributed and dynamic as enterprises embrace new development methodologies and microservices. Simultaneously, applications are increasingly being deployed across complex hybrid and multicloud environments.

It has never been more challenging to assure applications deliver exceptional customer experiences that drive positive business results and beat the competition. Application architecture and design must be well executed, and the underlying infrastructure must be resourced to support the real-time demands of the application. The combination of Instana and Turbonomic provides higher levels of observability and trusted actions to continuously optimize and assure application performance.

About Instana and Turbonomic:

Instana: Enterprise Observability Platform – Automatically discover, map and visualize the full application & technology stack in real-time

Turbonomic: Application Resource Management - Continuously assure application performance. Applications get the resources they need when they need them as demand fluctuates

When: Oct 13, 2022 from 09:00 AM to 10:00 AM (PT)

The AUTOSAR Adaptive platform standard has been introduced to cope with the complex software-driven functionality of vehicles, of which autonomous driving is probably the most prominent one. In this webinar, we will look into the workflows implementing AUTOSAR Adaptive components, and we will discuss how AUTOSAR Classic and Adaptive workflows can be combined. OEM and supplier workflow will be highlighted. Furthermore, we will also discuss migration strategies from AUTOSAR Classic to Adaptive.

AUTomotive Open System ARchitecture (AUTOSAR) is a development partnership of automotive interested parties founded in 2003. It pursues the objective to create and establish an open and standardized software architecture for automotive electronic control units (ECUs). Goals include the scalability to different vehicle and platform variants, transferability of software, the consideration of availability and safety requirements, a collaboration between various partners, sustainable use of natural resources, and maintainability during the product lifecycle.


Speakers:

Moshe Cohen - Senior Offering/Product Manager - Engineering Lifecycle Management

Walter van der Heiden - Owner/CTO


Oct 6, 2022 from 10:00 AM to 12:00 PM (CT)

Join this session to discover how your organization can optimize infrastructure utilization while delivering an optimal customer experience.

In this session you will learn how to:

- Unlock the true potential of cloud native and application modernization investments

- Achieve performance goals in a way that is both financially and environmentally sustainable

- Leverage cloud optimization decisions to ensure a superior digital experience for your customers

- Drive desired business outcomes while providing a better end-user experience